Turing test

The Turing test is a test of a machine's ability to demonstrate intelligence. It proceeds as follows: a human judge engages in a natural language conversation with one human and one machine, each of which tries to appear human. All participants are placed in isolated locations. If the judge cannot reliably tell the machine from the human, the machine is said to have passed the test. In order to test the machine's intelligence rather than its ability to render words into audio, the conversation is limited to a text-only channel such as a computer keyboard and screen.[2]

The test was proposed by Alan Turing in his 1950 paper Computing Machinery and Intelligence, which opens with the words: "I propose to consider the question, 'Can machines think?'" Since "thinking" is difficult to define, Turing chooses to "replace the question by another, which is closely related to it and is expressed in relatively unambiguous words."[3] Turing's new question is: "Are there imaginable digital computers which would do well in the [Turing test]?"[4] This question, Turing believed, is one that can actually be answered. In the remainder of the paper, he argues against all the major objections to the proposition that "machines can think".[5]

In the years since 1950, the test has proven to be both highly influential and widely criticized, and it is an essential concept in the philosophy of artificial intelligence.[6][7] To date, there are no machines that can convincingly pass the test.[8]


History

Philosophical background

The question of whether it is possible for machines to think has a long history, which is firmly entrenched in the distinction between dualist and materialist views of the mind. From the perspective of dualism, the mind is non-physical (or, at the very least, has non-physical properties)[9] and, therefore, cannot be explained in purely physical terms. The materialist perspective argues that the mind can be explained physically, and thus leaves open the possibility of minds that are artificially produced.[10]

In 1936, philosopher Alfred Ayer considered the standard philosophical question of other minds: how do we know that other people have the same conscious experiences that we do? In his book Language, Truth and Logic, Ayer suggested a protocol to distinguish between a conscious man and an unconscious machine: "The only ground I can have for asserting that an object which appears to be conscious is not really a conscious being, but only a dummy or a machine, is that it fails to satisfy one of the empirical tests by which the presence or absence of consciousness is determined."[11] (This suggestion is very similar to the Turing test, but it is not certain that Ayer's popular philosophical classic was familiar to Turing.)

Alan Turing

Researchers in the United Kingdom had been exploring "machine intelligence" for up to ten years prior to the founding of the field of AI research in 1956.[12] It was a common topic among the members of the Ratio Club, an informal group of British cybernetics and electronics researchers that included Alan Turing, after whom the test is named.[13]

Turing, in particular, had been tackling the notion of machine intelligence since at least 1941,[14] and one of the earliest-known mentions of "computer intelligence" was made by him in 1947.[15] In Turing's report, "Intelligent Machinery," he investigated "the question of whether or not it is possible for machinery to show intelligent behaviour"[16] and, as part of that investigation, proposed what may be considered the forerunner to his later tests:

It is not difficult to devise a paper machine which will play a not very bad game of chess.[17] Now get three men as subjects for the experiment. A, B and C. A and C are to be rather poor chess players, B is the operator who works the paper machine. ... Two rooms are used with some arrangement for communicating moves, and a game is played between C and either A or the paper machine. C may find it quite difficult to tell which he is playing.

Thus, by the time Turing published "Computing Machinery and Intelligence," he had been considering the possibility of artificial intelligence for many years, though this was the first published paper[18] by Turing to focus exclusively on the notion.

Turing begins his 1950 paper with the statement "I propose to consider the question 'Can machines think?'"[3] As he highlights, the traditional approach to such a question is to start with definitions, defining both the terms "machine" and "think." Turing chooses not to do so; instead, he replaces the question with a new one, "which is closely related to it and is expressed in relatively unambiguous words."[3] In essence, he proposes to change the question from "Do machines think?" to "Can machines do what we (as thinking entities) can do?"[19] The advantage of the new question, Turing argues, is that it draws "a fairly sharp line between the physical and intellectual capacities of a man."[20]

To demonstrate this approach, Turing proposes a test inspired by a party game known as the "Imitation Game," in which a man and a woman go into separate rooms, and guests try to tell them apart by writing a series of questions and reading the typewritten answers sent back. In this game, both the man and the woman aim to convince the guests that they are the other. Turing proposes recreating the game as follows:

We now ask the question, "What will happen when a machine takes the part of A in this game?" Will the interrogator decide wrongly as often when the game is played like this as he does when the game is played between a man and a woman? These questions replace our original, "Can machines think?"[20]

Later in the paper, Turing suggests an "equivalent" alternative formulation involving a judge conversing only with a computer and a man.[21] Neither of these formulations precisely matches the version of the Turing Test that is more generally known today. Turing proposed a third version in 1952, which he discussed in a BBC radio broadcast: a jury asks questions of a computer, and the role of the computer is to make a significant proportion of the jury believe that it is really a man.[22]

Turing's paper considered nine putative objections, which include all the major arguments against artificial intelligence that have been raised in the years since the paper was published. (See Computing Machinery and Intelligence.)[5]

ELIZA and PARRY

Blay Whitby lists four major turning points in the history of the Turing Test: the publication of "Computing Machinery and Intelligence" in 1950, the announcement of Joseph Weizenbaum's ELIZA in 1966, Kenneth Colby's creation of PARRY, which was first described in 1972, and the Turing Colloquium in 1990.[23] Sixty years after the test's introduction, continued argument over Turing's "Can machines think?" experiment led to its reconsideration for the 21st century at the AISB's "Towards a comprehensive intelligence test" symposium, held from 29 March to 1 April 2010 at De Montfort University, UK.

ELIZA works by examining a user's typed comments for keywords. If a keyword is found, a rule that transforms the user's comments is applied, and the resulting sentence is returned. If a keyword is not found, ELIZA responds either with a generic riposte or by repeating one of the earlier comments.[24] In addition, Weizenbaum developed ELIZA to replicate the behaviour of a Rogerian psychotherapist, allowing ELIZA to be "free to assume the pose of knowing almost nothing of the real world."[25] With these techniques, Weizenbaum's program was able to fool some people into believing that they were talking to a real person, with some subjects being "very hard to convince that ELIZA [...] is not human."[25] Thus, ELIZA is claimed by some to be one of the programs (perhaps the first) able to pass the Turing Test,[25][26] although this view is highly contentious (see below).
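The keyword mechanism described above can be sketched in a few lines of Python. This is a minimal, hypothetical illustration, not Weizenbaum's program: the rule table, the pronoun reflections, the generic ripostes and the class name TinyEliza are all invented here, and the real ELIZA used ranked keywords with decomposition and reassembly rules written in its own script format. The sketch does show the three behaviours the paragraph names: transforming a comment when a keyword is found, and otherwise either repeating an earlier comment or falling back on a generic riposte.

```python
import re
from collections import deque

# Hypothetical rule table, checked in priority order: keyword -> response
# template. "{0}" is filled with the (reflected) text following the keyword.
RULES = [
    ("i feel", "Why do you feel {0}?"),
    ("i am", "How long have you been {0}?"),
    ("mother", "Tell me more about your family."),
]

# Swap first- and second-person words so an echoed fragment reads naturally.
REFLECTIONS = {"i": "you", "me": "you", "my": "your", "am": "are",
               "you": "I", "your": "my"}

# Hypothetical generic ripostes used when no keyword is found.
GENERIC = ["Please go on.", "I see.", "What does that suggest to you?"]


class TinyEliza:
    def __init__(self):
        self.memory = deque()   # earlier keyword-bearing comments, oldest first
        self.generic_index = 0  # cycle through ripostes deterministically

    @staticmethod
    def reflect(fragment):
        words = fragment.lower().split()
        return " ".join(REFLECTIONS.get(w, w) for w in words)

    def respond(self, comment):
        text = comment.strip().rstrip(".!?")
        lowered = text.lower()
        for keyword, template in RULES:
            match = re.search(r"\b" + re.escape(keyword) + r"\b(.*)", lowered)
            if match:
                # Keyword found: remember the comment, then transform it.
                self.memory.append(text)
                return template.format(self.reflect(match.group(1)))
        # No keyword: repeat an earlier comment if there is one...
        if self.memory:
            earlier = self.reflect(self.memory.popleft())
            return f"Earlier you said {earlier}. Does that have anything to do with this?"
        # ...otherwise fall back on a generic riposte.
        reply = GENERIC[self.generic_index % len(GENERIC)]
        self.generic_index += 1
        return reply
```

On a fresh instance, respond("I feel sad about my job.") returns "Why do you feel sad about your job?", while respond("Hello") returns the generic "Please go on." Nothing in the program models what a job or sadness is, which is the point critics of ELIZA's apparent success make.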

Colby's PARRY has been described as "ELIZA with attitude":[27] it attempts to model the behaviour of a paranoid schizophrenic, using a similar (if more advanced) approach to that employed by Weizenbaum. In order to validate the work, PARRY was tested in the early 1970s using a variation of the Turing Test. A group of experienced psychiatrists analysed a combination of real patients and computers running PARRY through teletype machines. Another group of 33 psychiatrists was shown transcripts of the conversations. The two groups were then asked to identify which of the "patients" were human and which were computer programs.[28] The psychiatrists were able to make the correct identification only 48 per cent of the time, a figure consistent with random guessing.[29]

In the 21st century, the techniques pioneered by ELIZA and PARRY have been adapted for malware such as CyberLover, which preys on Internet users by convincing them to "reveal information about their identities or to lead them to visit a web site that will deliver malicious content to their computers" (iTWire, 2007). CyberLover, a one-trick-pony program developed in Russia, has emerged as a "Valentine-risk", flirting with people "seeking relationships online in order to collect their personal data" (V3, 2010).

The Chinese room

John Searle's 1980 paper Minds, Brains, and Programs proposed an argument against the Turing Test known as the "Chinese room" thought experiment. Searle argued that programs (such as ELIZA) could pass the Turing Test simply by manipulating symbols of which they had no understanding. Without understanding, they could not be described as "thinking" in the same sense people do. Therefore, Searle concludes, the Turing Test cannot prove that a machine can think.[30]

Arguments such as that proposed by Searle and others working on the philosophy of mind sparked off a more intense debate about the nature of intelligence, the possibility of intelligent machines and the value of the Turing test that continued through the 1980s and 1990s.[31]

Turing Colloquium

1990 was the fortieth anniversary of the first publication of Turing's "Computing Machinery and Intelligence" paper and thus saw renewed interest in the test. Two significant events occurred in that year. The first was the Turing Colloquium, held at the University of Sussex in April, which brought together academics and researchers from a wide variety of disciplines to discuss the Turing Test in terms of its past, present, and future; the second was the formation of the annual Loebner Prize competition. However, after nineteen Loebner Prize competitions, the contest is not widely viewed as having contributed to the science of machine intelligence, nor as having eased the controversy surrounding the usefulness of Turing's test.

Loebner Prize

The Loebner Prize provides an annual platform for practical Turing Tests, with the first competition held in November 1991.[32] It is underwritten by Hugh Loebner; the Cambridge Center for Behavioral Studies in Massachusetts, United States, organised the Prizes up to and including the 2003 contest. As Loebner described it, one reason the competition was created was to advance the state of AI research, at least in part because no one had taken steps to implement the Turing Test despite 40 years of discussing it [3].

The first Loebner Prize competition in 1991 led to a renewed discussion of the viability of the Turing Test and the value of pursuing it, in both the popular press[33] and in academia.[34] The first contest was won by a mindless program with no identifiable intelligence that managed to fool naive interrogators into making the wrong identification. This highlighted several of the shortcomings of the Turing test (discussed below): the winner won, at least in part, because it was able to "imitate human typing errors";[33] the unsophisticated interrogators were easily fooled;[34] and some researchers in AI have been led to feel that the test is merely a distraction from more fruitful research.[35]

The silver (text only) and gold (audio and visual) prizes have never been won. However, the competition has awarded the bronze medal every year for the computer system that, in the judges' opinions, demonstrates the "most human" conversational behavior among that year's entries. Artificial Linguistic Internet Computer Entity (A.L.I.C.E.) has won the bronze award on three occasions in recent times (2000, 2001, 2004). The learning AI Jabberwacky won in 2005 and 2006. Its creators have proposed a personalized variation: the ability to pass the imitation test while attempting specifically to imitate the human player, with whom the machine will have conversed at length before the test.[36] Huma Shah and Kevin Warwick have discussed Jabberwacky's performance in "Emotion in the Turing Test", a chapter in the Handbook on Synthetic Emotions and Sociable Robotics: New Applications in Affective Computing and Artificial Intelligence [37].

The Loebner Prize tests conversational intelligence; winners are typically chatterbot programs, or Artificial Conversational Entities (ACEs). Early Loebner Prize rules restricted conversations: each entry and hidden human conversed on a single topic, so the interrogators were restricted to one line of questioning per entity interaction. The restricted-conversation rule was lifted for the 1995 Loebner Prize. The duration of interaction between judge and entity has varied across Loebner Prizes. In Loebner 2003, at the University of Surrey, each interrogator was allowed five minutes to interact with an entity, machine or hidden human. Between 2004 and 2007, the interaction time allowed in Loebner Prizes was more than twenty minutes. In 2008, the interrogation duration allowed was five minutes per pair, because the organiser, Kevin Warwick, and coordinator, Huma Shah, considered this to be the duration for any test, as Turing stated in his 1950 paper: "...making the right identification after five minutes of questioning" (p. 442). They felt Loebner's longer test, implemented in Loebner Prizes 2006 and 2007, was inappropriate for the state of artificial conversation technology.[38] Ironically, the 2008 winning entry, Elbot, does not mimic a human; its personality is that of a robot, yet Elbot deceived three human judges into believing that it was the human during human-parallel comparisons; see Shah & Warwick (2009a): Testing Turing's five minutes, parallel-paired imitation game in (forthcoming) Kybernetes Turing Test Special Issue.[39]

During the 2009 competition, held in Brighton, UK, the communication program restricted judges to 10 minutes for each round: 5 minutes to converse with the human and 5 minutes to converse with the program. This was to test the alternative reading of Turing's prediction, namely that the 5-minute interaction was to be with the computer. For the 2010 competition, the sponsor again increased the interaction time between interrogator and system, to 25 minutes (Rules for the 20th Loebner Prize contest).

2005 Colloquium on Conversational Systems

In November 2005, the University of Surrey hosted an inaugural one-day meeting of artificial conversational entity developers,[40] attended by winners of practical Turing Tests in the Loebner Prize: Robby Garner, Richard Wallace and Rollo Carpenter. Invited speakers included David Hamill, Hugh Loebner (sponsor of the Loebner Prize) and Huma Shah.

AISB 2008 Symposium on the Turing Test

In parallel to the 2008 Loebner Prize held at the University of Reading,[41] the Society for the Study of Artificial Intelligence and the Simulation of Behaviour (AISB) hosted a one-day symposium to discuss the Turing Test, organised by John Barnden, Mark Bishop, Huma Shah and Kevin Warwick.[42] The speakers included the Royal Institution's Director Baroness Susan Greenfield, Selmer Bringsjord, Turing's biographer Andrew Hodges, and consciousness scientist Owen Holland. No agreement emerged for a canonical Turing Test, though Bringsjord suggested that a sizeable prize would result in the Turing Test being passed sooner.

The Alan Turing Year, and Turing100 in 2012

2012 will see a celebration of Turing’s life and scientific impact, with a number of major events taking place throughout the year. Most of these will be linked to places with special significance in Turing’s life, such as Cambridge, Manchester, and Bletchley Park. The Alan Turing Year is coordinated by the Turing Centenary Advisory Committee (TCAC), representing a range of expertise and organisational involvement in the 2012 celebrations. Supporting organisations for the Alan Turing Year include the ACM, the ASL, the SSAISB, the BCS, the BCTCS, Bletchley Park, the BMC, the BLC, the CCS, the Association CiE, the EACSL, the EATCS, FoLLI, IACAP, the IACR, the KGS, and LICS.

Supporting TCAC is Turing100. With the aim of taking Turing's idea of a thinking machine, pictured in Hollywood movies such as Blade Runner, to a wider audience including children, Turing100 has been set up to organise a special Turing test event celebrating the 100th anniversary of Turing's birth in June 2012, at the place where the mathematician broke codes during the Second World War: Bletchley Park. The Turing100 team comprises Kevin Warwick (Chair), Huma Shah (coordinator), Ian Bland, Chris Chapman and Marc Allen; supporters include Rory Dunlop and Loebner winners Robby Garner and Fred Roberts.

Versions of the Turing test

There are at least three primary versions of the Turing test, two of which are offered in "Computing Machinery and Intelligence" and one that Saul Traiger describes as the "Standard Interpretation."[44] While there is some debate regarding whether the "Standard Interpretation" is that described by Turing or, instead, based on a misreading of his paper, these three versions are not regarded as equivalent,[44] and their strengths and weaknesses are distinct [45].

The Imitation Game

Turing's original game, as we have seen, described a simple party game involving three players. Player A is a man, player B is a woman and player C (who plays the role of the interrogator) is of either sex. In the Imitation Game, player C is unable to see either player A or player B, and can only communicate with them through written notes. By asking questions of player A and player B, player C tries to determine which of the two is the man and which is the woman. Player A's role is to trick the interrogator into making the wrong decision, while player B attempts to assist the interrogator in making the right one [46].

In what Sterrett refers to as the "Original Imitation Game Test,"[47] Turing proposes that the role of player A be filled by a computer. Thus, the computer's task is to pretend to be a woman and attempt to trick the interrogator into making an incorrect evaluation. The success of the computer is determined by comparing the outcome of the game when player A is a computer against the outcome when player A is a man. If, as Turing puts it, "the interrogator decide[s] wrongly as often when the game is played [with the computer] as he does when the game is played between a man and a woman",[20] it may be argued that the computer is intelligent. In contrast to Sterrett's opinion, Shah & Warwick, in Testing Turing's Five Minutes Parallel-paired Imitation Game (Kybernetes, April 2010), posit that Turing did not expect the design of the machine to imitate a woman, when compared against a human.
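The criterion in this version is comparative: the machine is judged not by any single game but by how often the interrogator errs with the machine in player A's seat, relative to how often the interrogator errs when a man plays A. A minimal sketch of that comparison follows, assuming each game is recorded only as whether the interrogator's final identification was wrong. The names GameRecord and decides_wrongly_as_often, the tolerance parameter and its 0.05 default are hypothetical; Turing specified no numerical margin or statistical test, and reading "as often" as "at least as often, within a margin" is itself an interpretation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GameRecord:
    """Tally of repeated games: how often the interrogator chose wrongly."""
    wrong: int
    total: int

    def __post_init__(self):
        if self.total <= 0 or not 0 <= self.wrong <= self.total:
            raise ValueError("need total > 0 and 0 <= wrong <= total")

    @property
    def error_rate(self) -> float:
        return self.wrong / self.total


def decides_wrongly_as_often(machine: GameRecord, man: GameRecord,
                             tolerance: float = 0.05) -> bool:
    """Hypothetical reading of Turing's criterion: the interrogator should be
    fooled by the machine (as player A) about as often as by a man. The
    tolerance is an assumption; Turing gave no numerical margin."""
    return machine.error_rate >= man.error_rate - tolerance
```

With hypothetical tallies of 30 wrong identifications in 100 man-versus-woman games, a machine that fools the interrogator in 27 of 100 games satisfies this reading, while one that does so in only 10 of 100 does not. A single round of the game, by contrast, says nothing under this criterion, which is the distinction drawn below between comparative frequency and "a capacity to succeed at one round of the game."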


The second version appears later in Turing's 1950 paper. As with the Original Imitation Game Test, the role of player A is performed by a computer, the difference being that the role of player B is now to be performed by a man rather than a woman.

"Let us fix our attention on one particular digital computer C. Is it true that by modifying this computer to have an adequate storage, suitably increasing its speed of action, and providing it with an appropriate programme, C can be made to play satisfactorily the part of A in the imitation game, the part of B being taken by a man?"[20]

In this version, both player A (the computer) and player B are trying to trick the interrogator into making an incorrect decision.

The standard interpretation

Common understanding has it that the purpose of the Turing Test is not specifically to determine whether a computer is able to fool an interrogator into believing that it is a human, but rather whether a computer could imitate a human [49]. While there is some dispute whether this interpretation was intended by Turing — Sterrett believes that it was[47] and thus conflates the second version with this one, while others, such as Traiger, do not[44] — this has nevertheless led to what can be viewed as the "standard interpretation." In this version, player A is a computer and player B a person of either gender. The role of the interrogator is not to determine which is male and which is female, but which is a computer and which is a human.[50]

Imitation Game vs. Standard Turing Test

There has arisen some controversy over which of the alternative formulations of the test Turing intended.[47] Sterrett argues that two distinct tests can be extracted from his 1950 paper and that, pace Turing's remark, they are not equivalent. The test that employs the party game and compares frequencies of success is referred to as the "Original Imitation Game Test," whereas the test consisting of a human judge conversing with a human and a machine is referred to as the "Standard Turing Test" (Sterrett equates this with the "standard interpretation" rather than with the second version of the imitation game). Sterrett agrees that the Standard Turing Test (STT) has the problems that its critics cite but feels that, in contrast, the Original Imitation Game Test (OIG Test) so defined is immune to many of them, due to a crucial difference: unlike the STT, it does not make similarity to human performance the criterion, even though it employs human performance in setting a criterion for machine intelligence. A man can fail the OIG Test, but it is argued that it is a virtue of a test of intelligence that failure indicates a lack of resourcefulness: the OIG Test requires the resourcefulness associated with intelligence and not merely "simulation of human conversational behaviour." The general structure of the OIG Test could even be used with non-verbal versions of imitation games.[51]

Still other writers[52] have interpreted Turing as proposing that the imitation game itself is the test, without specifying how to take into account Turing's statement that the test that he proposed using the party version of the imitation game is based upon a criterion of comparative frequency of success in that imitation game, rather than a capacity to succeed at one round of the game.

Saygin has suggested that the original game may be a way of proposing a less biased experimental design, as it hides the participation of the computer [53].

Should the interrogator know about the computer?

Turing never makes clear whether the interrogator in his tests is aware that one of the participants is a computer. To return to the Original Imitation Game, he states only that player A is to be replaced with a machine, not that player C is to be made aware of this replacement.[20] When Colby, FD Hilf, S Weber and AD Kramer tested PARRY, they did so by assuming that the interrogators did not need to know that one or more of those being interviewed was a computer during the interrogation.[54] As Ayse Saygin and others have highlighted, this makes a big difference to the implementation and outcome of the test [55]. Huma Shah & Kevin Warwick, who have organised practical Turing tests, argue that knowing or not knowing may make a difference to some judges' verdicts. Judges in the finals of the parallel-paired Turing tests staged at the 18th Loebner Prize were not explicitly told, but some did assume that each hidden pair contained one human and one machine. Spelling errors gave away the hidden humans; machines were identified by 'speed of response' and lengthier utterances.[56] In an experimental study looking at Gricean maxim violations that also used the Loebner transcripts, Ayse Saygin found significant differences between the responses of participants who knew and did not know about computers being involved [57].

Strengths of the test

Tractability

The philosophy of mind, psychology, and modern neuroscience have been unable to provide definitions of "intelligence" and "thinking" that are sufficiently precise and general to be applied to machines. Without such definitions, the central questions of the philosophy of artificial intelligence cannot be answered. The Turing test, even if imperfect, at least provides something that can actually be measured. As such, it is a pragmatic solution to a difficult philosophical question.

Breadth of subject matter

The power of the Turing test derives from the fact that it is possible to talk about anything. Turing wrote that "the question and answer method seems to be suitable for introducing almost any one of the fields of human endeavor that we wish to include."[58] John Haugeland adds that "understanding the words is not enough; you have to understand the topic as well."[59]

In order to pass a well-designed Turing test, the machine must use natural language, reason, have knowledge and learn. The test can be extended to include video input, as well as a "hatch" through which objects can be passed: this would force the machine to demonstrate the skills of vision and robotics as well. Together, these represent almost all of the major problems of artificial intelligence.[60]

The Feigenbaum test is designed to take advantage of the broad range of topics available to a Turing test. It compares the machine against the abilities of experts in specific fields such as literature or chemistry.

Weaknesses of the test

The Turing test is based on the assumption that human beings can judge a machine's intelligence by comparing its behaviour with human behaviour. Every element of this assumption has been questioned: the human's judgement, the value of comparing only behaviour and the value of comparing against a human. Because of these and other considerations, some AI researchers have questioned the usefulness of the test.

Human intelligence vs intelligence in general

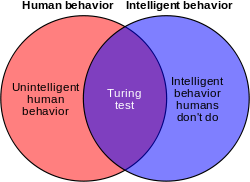

The Turing test does not directly test whether the computer behaves intelligently; it tests only whether the computer behaves like a human being. Since human behavior and intelligent behavior are not exactly the same thing, the test can fail to accurately measure intelligence in two ways:

- Some human behavior is unintelligent

  The Turing test requires that the machine be able to execute all human behaviors, regardless of whether they are intelligent. It even tests for behaviors that we may not consider intelligent at all, such as the susceptibility to insults [61], the temptation to lie or, simply, a high frequency of typing mistakes. If a machine cannot imitate human behavior in detail, it fails the test.

  This objection was raised by The Economist, in an article entitled "Artificial Stupidity" published in 1992, shortly after the first Loebner Prize competition. The article noted that the first Loebner winner's victory was due, at least in part, to its ability to "imitate human typing errors."[33] Turing himself had suggested that programs add errors into their output, so as to be better "players" of the game.[62]

- Some intelligent behavior is inhuman

  The Turing test does not test for highly intelligent behaviors, such as the ability to solve difficult problems or come up with original insights. In fact, it specifically requires deception on the part of the machine: if the machine is more intelligent than a human being, it must deliberately avoid appearing too intelligent. If it were to solve a computational problem that is impossible for any human to solve, then the interrogator would know the program is not human, and the machine would fail the test.

Real intelligence vs simulated intelligence

The Turing test examines only how the subject acts, that is, the external behaviour of the machine. In this regard, it assumes a behaviourist or functionalist definition of intelligence. The example of ELIZA suggested that a machine passing the test may be able to simulate human conversational behavior by following a simple (but large) list of mechanical rules, without thinking or having a mind at all.

John Searle argued that external behavior cannot be used to determine if a machine is "actually" thinking or merely "simulating thinking."[30] His Chinese room argument is intended to show that, even if the Turing test is a good operational definition of intelligence, it may not indicate that the machine has a mind, consciousness, or intentionality. (Intentionality is a philosophical term for the power of thoughts to be "about" something.)

Turing anticipated this line of criticism in his original paper,[63] writing that:

I do not wish to give the impression that I think there is no mystery about consciousness. There is, for instance, something of a paradox connected with any attempt to localise it. But I do not think these mysteries necessarily need to be solved before we can answer the question with which we are concerned in this paper. (Alan Turing, 1950)

Naivete of interrogators and the anthropomorphic fallacy

The Turing test assumes that the interrogator is sophisticated enough to determine the difference between the behaviour of a machine and the behaviour of a human being, though critics argue that this is not a skill most people have. The precise skills and knowledge required by the interrogator are not specified by Turing in his description of the test, but he did use the term "average interrogator": '.. average interrogator would not have more than 70 per cent chance of making the right identification after five minutes of questioning' (Turing, 1950: p. 442). Shah & Warwick (2009c) show that experts can be fooled, and that interrogator strategy ('power' versus 'solidarity') affects correct identification, with the latter being more successful (in Hidden Interlocutor Misidentification in Practical Turing Tests, submitted to a journal, November 2009).

Chatterbot programs such as ELIZA have repeatedly fooled unsuspecting people into believing that they are communicating with human beings. In these cases, the "interrogator" is not even aware of the possibility that they are interacting with a computer. To successfully appear human, there is no need for the machine to have any intelligence whatsoever and only a superficial resemblance to human behaviour is required. Most would agree that a "true" Turing test has not been passed in "uninformed" situations like these.

Early Loebner Prize competitions used "unsophisticated" interrogators who were easily fooled by the machines.[34] Since 2004, the Loebner Prize organizers have deployed philosophers, computer scientists, and journalists among the interrogators. Some of these have been deceived by machines; see Shah & Warwick (2009a): Testing Turing’s five minutes, parallel-paired imitation game in (forthcoming) Kybernetes Turing Test Special Issue.

Michael Shermer points out that human beings consistently choose to consider non-human objects as human whenever they are allowed the chance, a mistake called the anthropomorphic fallacy: they talk to their cars, ascribe desire and intentions to natural forces (e.g., "nature abhors a vacuum"), and worship the sun as a human-like being with intelligence. If the Turing test were applied to religious objects, Shermer argues, then inanimate statues, rocks, and places would have consistently passed the test throughout history.[64] This human tendency towards anthropomorphism effectively lowers the bar for the Turing test, unless interrogators are specifically trained to avoid it.

Impracticality and irrelevance: the Turing test and AI research

Mainstream AI researchers argue that trying to pass the Turing Test is merely a distraction from more fruitful research.[35] Indeed, the Turing test is not an active focus of much academic or commercial effort—as Stuart Russell and Peter Norvig write: "AI researchers have devoted little attention to passing the Turing test."[65] There are several reasons.

First, AI researchers have easier ways to test their programs. Most current research in AI-related fields is aimed at modest and specific goals, such as automated scheduling, object recognition, or logistics. To test the intelligence of programs that solve these problems, researchers simply give them the task directly, rather than taking the roundabout route of posing the question in a chat room populated with computers and people.

Second, creating life-like simulations of human beings is a difficult problem on its own that does not need to be solved to achieve the basic goals of AI research. Believable human characters may be interesting in a work of art, a game, or a sophisticated user interface, but they are not part of the science of creating intelligent machines, that is, machines that solve problems using intelligence. Russell and Norvig suggest an analogy with the history of flight: Planes are tested by how well they fly, not by comparing them to birds. "Aeronautical engineering texts," they write, "do not define the goal of their field as 'making machines that fly so exactly like pigeons that they can fool other pigeons.'"[65]

Third, once Turing-test-level intelligence is achieved, the test will be of no further use for building or evaluating systems whose intelligence exceeds that of humans. For this reason, several alternative tests capable of evaluating superintelligent systems have been proposed.[66][67][68]

Turing, for his part, never intended his test to be used as a practical, day-to-day measure of the intelligence of AI programs; he wanted to provide a clear and understandable example to aid in the discussion of the philosophy of artificial intelligence.[69] It is therefore not surprising that the Turing test has had so little influence on AI research: the philosophy of AI, writes John McCarthy, "is unlikely to have any more effect on the practice of AI research than philosophy of science generally has on the practice of science."[70]

Predictions

Turing predicted that machines would eventually be able to pass the test; in fact, he estimated that by the year 2000, machines with 10⁹ bits (125 million bytes, or about 119.2 MiB) of memory would be able to fool thirty percent of human judges in a five-minute test. He also predicted that people would then no longer consider the phrase "thinking machine" contradictory. He further predicted that machine learning would be an important part of building powerful machines, a claim considered plausible by contemporary researchers in artificial intelligence.
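Turing's storage estimate is given in bits. Converting it to bytes, decimal megabytes (10⁶ bytes), and binary mebibytes (2²⁰ bytes) is plain arithmetic; the short Python sketch below simply makes each step explicit.

```python
# Turing's storage estimate, converted step by step.
BITS = 10**9

bytes_total = BITS // 8            # 8 bits per byte -> 125,000,000 bytes
megabytes = bytes_total / 10**6    # decimal megabytes (MB), 1 MB = 10**6 bytes
mebibytes = bytes_total / 2**20    # binary mebibytes (MiB), 1 MiB = 2**20 bytes

print(f"{bytes_total:,} bytes = {megabytes:.1f} MB = {mebibytes:.1f} MiB")
# prints "125,000,000 bytes = 125.0 MB = 119.2 MiB"
```

The difference between 125 MB and 119.2 MiB comes entirely from the choice of unit, not from any rounding in Turing's figure.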

In a paper submitted to the 19th Midwest Artificial Intelligence and Cognitive Science Conference, Shane T. Mueller predicted that a modified Turing test called a "Cognitive Decathlon" could be accomplished within five years.[71]

By extrapolating the exponential growth of technology over several decades, futurist Raymond Kurzweil predicted that computers capable of passing the Turing test would be manufactured in the near future. In 1990, he put the date at around 2020.[72] By 2005, he had revised his estimate to 2029.[73]

The Long Bet Project is a $20,000 wager between Mitch Kapor (pessimist) and Kurzweil (optimist) about whether a computer will pass a Turing test by the year 2029. The bet specifies the conditions in some detail.[74]

Variations of the Turing test

Numerous other versions of the Turing test, including those expounded above, have been mooted through the years.

Reverse Turing test and CAPTCHA

A modification of the Turing test in which the objective of one or more of the roles has been reversed between machines and humans is termed a reverse Turing test. An example is implied in the work of psychoanalyst Wilfred Bion,[75] who was particularly fascinated by the "storm" that resulted from the encounter of one mind with another. Carrying this idea forward, R. D. Hinshelwood[76] described the mind as a "mind recognizing apparatus," noting that this might be some sort of "supplement" to the Turing test. The challenge would be for the computer to determine whether it was interacting with a human or with another computer. This extends the original question that Turing attempted to answer, but it would perhaps offer a high enough standard to define a machine that could "think" in a way that we typically define as characteristically human.

CAPTCHA is a form of reverse Turing test. Before being allowed to perform some action on a website, the user is presented with alphanumeric characters in a distorted graphic image and asked to type them out. This is intended to prevent automated systems from being used to abuse the site. The rationale is that software sufficiently sophisticated to read and reproduce the distorted image accurately does not exist (or is not available to the average user), so any system able to do so is likely to be a human.
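The challenge-response cycle described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: the names `issue_challenge`, `verify_response`, and the in-memory `_pending` store are hypothetical, and the step that renders the text as a distorted image (the part that actually resists automated readers) is omitted.

```python
import secrets
import string

# Challenge characters; visually ambiguous ones (0/O, 1/I) are left out.
ALPHABET = "".join(c for c in string.ascii_uppercase + string.digits if c not in "0O1I")

# Hypothetical in-memory store: challenge token -> expected answer.
_pending: dict[str, str] = {}

def issue_challenge(length: int = 6) -> tuple[str, str]:
    """Create a random challenge; return (token, text to be drawn as a distorted image)."""
    text = "".join(secrets.choice(ALPHABET) for _ in range(length))
    token = secrets.token_urlsafe(16)
    _pending[token] = text
    # A real CAPTCHA would now render `text` as a warped, noisy image
    # and send only that image to the user; rendering is omitted here.
    return token, text

def verify_response(token: str, answer: str) -> bool:
    """Check the typed answer; each challenge can be used only once."""
    expected = _pending.pop(token, None)  # removing the token prevents replay
    if expected is None:
        return False
    # Constant-time comparison on bytes (also safe for non-ASCII input).
    return secrets.compare_digest(answer.strip().upper().encode(), expected.encode())
```

Popping the token on verification makes each challenge single-use, so a correct answer cannot be replayed. The plain `text` is returned only so the example can be exercised; a deployment would keep it on the server.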

Software that can solve CAPTCHAs with some accuracy, by analyzing patterns in the generating engine, is being actively developed.[77]

"Fly on the wall" Turing test

The "fly on the wall" variation of the Turing test changes the original Turing-test parameters in three ways. First, parties A and B communicate with each other rather than with party C, who plays the role of a detached observer ("fly on the wall") rather than of an interrogator or other participant in the conversation. Second, party A and party B may each be either a human or a computer of the type being tested. Third, it is specified that party C must not be informed as to the identity (human versus computer) of either participant in the conversation. Party C's task is to determine which of four possible participant combinations (human A/human B, human A/computer B, computer A/human B, computer A/computer B) generated the conversation. At its most rigorous, the test is conducted in numerous iterations, in each of which the identity of each participant is determined at random (e.g., using a fair-coin toss) and independently of the determination of the other participant's identity, and in each of which a new human observer is used (to prevent the discernment abilities of party C from improving through conscious or unconscious pattern recognition over time). The computer passes the test for human-level intelligence if, over the course of a statistically significant number of iterations, the respective parties C are unable to determine with better-than-chance frequency which participant combination generated the conversation.
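The pass criterion above is statistical: with four equally likely participant combinations, an observer who merely guesses is right about one time in four. The Python sketch below shows the randomized assignment and a one-sided exact binomial test against that chance rate. The significance level `alpha = 0.05` and the function names are assumptions for illustration; note also that failing to show better-than-chance performance is weaker evidence than demonstrating that observers truly cannot tell the combinations apart.

```python
import random
from math import comb

ROLES = ("human", "computer")
# The four possible participant combinations (A, B).
COMBINATIONS = [(a, b) for a in ROLES for b in ROLES]
CHANCE = 1 / len(COMBINATIONS)  # 0.25: probability of a correct blind guess

def random_pairing(rng: random.Random) -> tuple[str, str]:
    """Assign A and B independently, each by a fair coin toss."""
    return rng.choice(ROLES), rng.choice(ROLES)

def p_value_better_than_chance(correct: int, trials: int, p: float = CHANCE) -> float:
    """One-sided exact binomial test: P(X >= correct) if every observer only guesses."""
    return sum(comb(trials, k) * p**k * (1 - p) ** (trials - k)
               for k in range(correct, trials + 1))

def computer_passes(correct: int, trials: int, alpha: float = 0.05) -> bool:
    """Pass if the observers' hit rate is not significantly better than chance."""
    return p_value_better_than_chance(correct, trials) >= alpha

# Example: 3 correct identifications in 12 iterations is about chance level.
print(computer_passes(3, 12))   # True
print(computer_passes(10, 12))  # False
```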

The "fly on the wall" variation increases the scope of intelligence being tested in that the observer is able to evaluate not only the participants' ability to answer questions but their capacity for other aspects of intelligent communication, such as the generation of questions or comments regarding an existing aspect of a conversation subject ("deepening"), the generation of questions or comments regarding new subjects or new aspects of the current subject ("broadening"), and the ability to abandon certain subject matter in favor of other subject matter currently under discussion ("narrowing") or new subject matter or aspects thereof ("shifting").

The Bion-Hinshelwood extension of the traditional test is applicable to the "fly on the wall" variation as well, enabling the testing of intellectual functions involving the ability to recognize intelligence: If a computer placed in the role of party C (reset after each iteration to prevent pattern recognition over time) can identify conversation participants with a success rate equal to or higher than the success rate of a set of humans in the party-C role, the computer is functioning at a human level with respect to the skill of intelligence recognition.

Subject matter expert Turing test

Another variation is described as the subject matter expert Turing test, in which a machine's responses cannot be distinguished from those of an expert in a given field. This is also known as a "Feigenbaum test" and was proposed by Edward Feigenbaum in a 2003 paper.[78]

Immortality test

The Immortality-test variation of the Turing test would determine if a person's essential character is reproduced with enough fidelity to make it impossible to distinguish a reproduction of a person from the original person.

Minimum Intelligent Signal Test

The Minimum Intelligent Signal Test, proposed by Chris McKinstry, is another variation of Turing's test, where only binary responses are permitted. It is typically used to gather statistical data against which the performance of artificial intelligence programs may be measured.
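As a rough illustration of how binary responses yield statistical data, the Python sketch below scores a program by how often its yes/no answers agree with a human consensus, and computes how likely that much agreement would be by coin-flipping. The items, their consensus answers, and the function names are hypothetical; they are not drawn from McKinstry's actual question corpus.

```python
from math import comb
from typing import Callable

# Hypothetical items paired with the answer most human respondents gave.
ITEMS: dict[str, bool] = {
    "Is water wet?": True,
    "Can a fish ride a bicycle?": False,
    "Is the sun hot?": True,
    "Is seven a prime number?": True,
}

def mist_score(answer: Callable[[str], bool], items: dict[str, bool] = ITEMS) -> float:
    """Fraction of items on which the program agrees with the human consensus."""
    agree = sum(answer(q) == expected for q, expected in items.items())
    return agree / len(items)

def chance_p_value(agree: int, n: int) -> float:
    """Probability of at least `agree` matches out of n when answering by coin flip."""
    return sum(comb(n, k) for k in range(agree, n + 1)) / 2**n

def always_yes(question: str) -> bool:
    """A trivial baseline program that answers "yes" to everything."""
    return True

print(mist_score(always_yes))  # 0.75
```

The always-yes baseline shows why a chance baseline matters: it scores well above 50% only because this hypothetical item set is unbalanced, so a real corpus would need balanced items or a baseline-adjusted score.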

Meta Turing test

Yet another variation is the Meta Turing test, in which the subject being tested (say, a computer) is classified as intelligent if it has created something that the subject itself wants to test for intelligence.

Hutter Prize

The organizers of the Hutter Prize believe that compressing natural language text is a hard AI problem, equivalent to passing the Turing test.

The data compression test has some advantages over most versions and variations of a Turing test, including:

- It gives a single number that can be directly used to compare which of two machines is "more intelligent."

- It does not require the computer to lie to the judge.

The main disadvantages of using data compression as a test are:

- It is not possible to test humans this way.

- It is unknown what particular "score" on this test—if any—is equivalent to passing a human-level Turing test.
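The "single number" advantage can be made concrete. The Python sketch below scores two general-purpose compressors from the standard library (`zlib` and `lzma`, used here only as stand-ins for a prize entry) by compressed size and bits per character, after checking that decompression is lossless. The sample text is an assumption and is far more repetitive than real prose; the actual prize also counts the size of the decompressor, which this sketch ignores.

```python
import lzma
import zlib
from typing import Callable

Codec = tuple[Callable[[bytes], bytes], Callable[[bytes], bytes]]

CODECS: dict[str, Codec] = {
    "zlib": (lambda d: zlib.compress(d, 9), zlib.decompress),
    "lzma": (lzma.compress, lzma.decompress),
}

def score(data: bytes, codec: Codec) -> tuple[int, float]:
    """Return (compressed size in bytes, bits per input byte); smaller is better."""
    compress, decompress = codec
    packed = compress(data)
    # A score only counts if the original text is recovered exactly.
    if decompress(packed) != data:
        raise ValueError("compression was not lossless")
    return len(packed), 8 * len(packed) / len(data)

# ASCII sample, so one byte per character.
sample = ("The Turing test is a test of a machine's ability to "
          "demonstrate intelligence. " * 50).encode("utf-8")

for name, codec in CODECS.items():
    size, bpc = score(sample, codec)
    print(f"{name}: {size} bytes, {bpc:.3f} bits per character")
```

Whichever codec produces the smaller number is, by this criterion, the "more intelligent" one on this text; the comparison is only as meaningful as the text is representative.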

See also

- Artificial intelligence in fiction

- Graphics Turing Test

- HAL 9000 (from 2001: A Space Odyssey)

- Mark V Shaney (USENET bot)

- Simulated reality

- Technological singularity

- Uncanny valley

- Voight-Kampff machine

- CAPTCHA

- SHRDLU

Notes

- ↑ Saygin 2000

- ↑ Turing originally suggested a teleprinter, one of the few text-only communication systems available in 1950. (Turing 1950, p. 433)

- ↑ 3.0 3.1 3.2 Turing 1950, p. 433

- ↑ (Turing 1950, p. 442) This particular version of the test was called "The Imitation Game". Turing continues with a more technical version: "these questions [are] equivalent to this, 'Let us fix our attention on one particular digital computer C. Is it true that by modifying this computer to have an adequate storage, suitably increasing its speed of action, and providing it with an appropriate programme, C can be made to play satisfactorily the part of A in the imitation game, the part of B being taken by a man?'" (Turing 1950, p. 442)

- ↑ 5.0 5.1 Turing 1950, pp. 442–454 and see Russell & Norvig (2003, p. 948), where they comment, "Turing examined a wide variety of possible objections to the possibility of intelligent machines, including virtually all of those that have been raised in the half century since his paper appeared."

- ↑ Saygin, Cicekli & Akman 2000

- ↑ Russell & Norvig 2003, pp. 2–3 and 948

- ↑ Gibb, Barry (2007), The Rough Guide to the Brain, London: Rough Guides Ltd., p. 236

- ↑ For an example of property dualism, see Qualia.

- ↑ Noting that materialism does not necessitate the possibility of artificial minds (for example, Roger Penrose), any more than dualism necessarily precludes the possibility. (See, for example, Property dualism.)

- ↑ Language, Truth and Logic (p. 140), Penguin 2001.

- ↑ The Dartmouth conferences of 1956 are widely considered the "birth of AI". (Crevier 1993, p. 49)

- ↑ McCorduck 2004, p. 95

- ↑ Copeland 2003, p. 1

- ↑ Copeland 2003, p. 2

- ↑ Turing 1948, p. 412

- ↑ In 1948, working with his former undergraduate colleague, DG Champernowne, Turing began writing a chess program for a computer that did not yet exist and, in 1952, lacking a computer powerful enough to execute the program, played a game in which he simulated it, taking about half an hour over each move. The game was recorded, and the program lost to Turing's colleague Alick Glennie, although it is said that it won a game against Champernowne's wife.

- ↑ "Intelligent Machinery" was not published by Turing, and did not see publication until 1968 in Evans, C. R. & Robertson, A. D. J. (1968) Cybernetics: Key Papers, University Park Press.

- ↑ Harnad, p. 1

- ↑ 20.0 20.1 20.2 20.3 20.4 Turing 1950, p. 434

- ↑ Turing 1950, p. 446

- ↑ Turing 1952, pp. 524–525. Turing does not seem to distinguish between "man" as a gender and "man" as a human. In the former case, this formulation would be closer to the Imitation Game, whereas in the latter it would be closer to current depictions of the test.

- ↑ Whitby 1996, p. 53

- ↑ Weizenbaum 1966, p. 37

- ↑ 25.0 25.1 25.2 Weizenbaum 1966, p. 42

- ↑ Thomas 1995, p. 112

- ↑ Bowden 2006, p. 370

- ↑ Colby et al. 1972, p. 42

- ↑ Saygin, Cicekli & Akman 2000, p. 501

- ↑ 30.0 30.1 Searle 1980

- ↑ Saygin, Cicekli & Akman 2000, p. 479

- ↑ Sundman 2003

- ↑ 33.0 33.1 33.2 "Artificial Stupidity" 1992

- ↑ 34.0 34.1 34.2 Shapiro 1992, pp. 10–11 and Shieber 1994, amongst others.

- ↑ 35.0 35.1 Shieber 1994, p. 77

- ↑ See [1].

- ↑ Vallverdú, Jordi; Casacuberta, David (Universitat Autònoma de Barcelona, Spain), eds., http://www.igi-global.com/reference/details.asp?ID=34432&v=tableOfContents

- ↑ Shah, Huma (15 January 2009). "Winner of 2008 Loebner Prize for Artificial Intelligence". Conversation, Deception and Intelligence. http://humashah.blogspot.com/. Retrieved 29 March 2009.

- ↑ Results and report can be found here [2]. Transcripts can be found at "Loebner Prize". http://www.loebner.net/Prizef/loebner-prize.html. Retrieved 29 March 2009.

- ↑ "ALICE Anniversary and Colloquium on Conversation". A.L.I.C.E. Artificial Intelligence Foundation. http://www.alicebot.org/bbbbbbb.html. Retrieved 29 March 2009.

- ↑ "Loebner Prize 2008". University of Reading. http://www.reading.ac.uk/cirg/loebner/cirg-loebner-main.asp. Retrieved 29 March 2009.

- ↑ "AISB 2008 Symposium on the Turing Test". Society for the Study of Artificial Intelligence and the Simulation of Behaviour. http://www.aisb.org.uk/events/turingevent.shtml. Retrieved 29 March 2009.

- ↑ Saygin 2000

- ↑ 44.0 44.1 44.2 Traiger 2000

- ↑ Saygin, A.P. (2008). Comments on "Computing Machinery and Intelligence" by Alan Turing. In R. Epstein, G. Roberts, G. Poland (eds.), Parsing the Turing Test. Springer: Dordrecht, Netherlands

- ↑ Saygin 2000

- ↑ 47.0 47.1 47.2 Moor 2003

- ↑ Saygin 2000

- ↑ Saygin 2000

- ↑ Traiger 2000, p. 99

- ↑ Sterrett 2000

- ↑ Genova 1994, Hayes & Ford 1995, Heil 1998, Dreyfus 1979

- ↑ R.Epstein, G. Roberts, G. Poland, (eds.) Parsing the Turing Test: Philosophical and Methodological Issues in the Quest for the Thinking Computer. Springer: Dordrecht, Netherlands

- ↑ Colby et al. 1972

- ↑ Saygin 2000

- ↑ "Can a machine think? Results from the 18th Loebner Prize", University of Reading, 2008, http://www.rdg.ac.uk/research/Highlights-News/featuresnews/res-featureloebner.asp

- ↑ Saygin, A.P. & Cicekli, I. (2002). Pragmatics in human-computer conversation. Journal of Pragmatics, 34(3): 227–258

- ↑ Turing 1950 under "Critique of the New Problem"

- ↑ Haugeland 1985, p. 8

- ↑ "These six disciplines," write Stuart J. Russell and Peter Norvig, "represent most of AI." Russell & Norvig 2003, p. 3

- ↑ Saygin, A.P. & Cicekli, I. (2002). Journal of Pragmatics, 34, 227-258.

- ↑ Turing 1950, p. 448

- ↑ Russell & Norvig (2003, pp. 958–960) identify Searle's argument with the one Turing answers.

- ↑ Shermer YEAR? CITATION IN PROGRESS

- ↑ 65.0 65.1 Russell & Norvig 2003, p. 3

- ↑ Jose Hernandez-Orallo (2000), "Beyond the Turing Test", Journal of Logic, Language and Information 9 (4): 447–466, doi:10.1023/A:1008367325700, http://citeseerx.ist.psu.edu/viewdoc/summary?doi=10.1.1.44.8943, retrieved 2009-07-21.

- ↑ D L Dowe and A R Hajek (1997), "A computational extension to the Turing Test", Proceedings of the 4th Conference of the Australasian Cognitive Science Society, http://www.csse.monash.edu.au/publications/1997/tr-cs97-322-abs.html, retrieved 2009-07-21.

- ↑ Shane Legg and Marcus Hutter (2007), "Universal Intelligence: A Definition of Machine Intelligence" (PDF), Minds and Machines 17: 391–444, doi:10.1007/s11023-007-9079-x, http://www.vetta.org/documents/UniversalIntelligence.pdf, retrieved 2009-07-21.

- ↑ Turing 1950, under the heading "The Imitation Game," where he writes, "Instead of attempting such a definition I shall replace the question by another, which is closely related to it and is expressed in relatively unambiguous words."

- ↑ McCarthy, John, "The philosophy of artificial intelligence"

- ↑ Mueller, Shane T. (2008), "Is the Turing Test Still Relevant? A Plan for Developing the Cognitive Decathlon to Test Intelligent Embodied Behavior", paper submitted to the 19th Midwest Artificial Intelligence and Cognitive Science Conference, 8 pp., http://www.dod.mil/pubs/foi/darpa/08_F_0799Is_the_Turing_test_Still_Relevant.pdf, retrieved 2010-09-08

- ↑ Kurzweil 1990

- ↑ Kurzweil 2005

- ↑ Long Bets - By 2029 no computer - or "machine intelligence" - will have passed the Turing Test

- ↑ Bion 1979

- ↑ Hinshelwood 2001

- ↑ http://www.cs.sfu.ca/~mori/research/gimpy/

- ↑ McCorduck 2004, pp. 503–505, Feigenbaum 2003. The subject matter expert test is also mentioned in Kurzweil (2005)

References

- "Artificial Stupidity", The Economist 324 (7770): 14, 1992-09-01

- Bion, W.S. (1979), "Making the best of a bad job", Clinical Seminars and Four Papers, Abingdon: Fleetwood Press.

- Bowden, Margaret A. (2006), Mind As Machine: A History of Cognitive Science, Oxford University Press, ISBN 9780199241446

- Colby, K. M.; Hilf, F. D.; Weber, S.; Kraemer, H. (1972), "Turing-like indistinguishability tests for the validation of a computer simulation of paranoid processes", Artificial Intelligence 3: 199–221, doi:10.1016/0004-3702(72)90049-5

- Copeland, Jack (2003), Moor, James, ed., "The Turing Test", The Turing Test: The Elusive Standard of Artificial Intelligence (Springer), ISBN 1-4020-1205-5

- Crevier, Daniel (1993), AI: The Tumultuous Search for Artificial Intelligence, New York, NY: BasicBooks, ISBN 0-465-02997-3

- Dreyfus, Hubert (1979), What Computers Still Can't Do, New York: MIT Press, ISBN 0-06-090613-8

- Feigenbaum, Edward A. (2003), "Some challenges and grand challenges for computational intelligence", Journal of the ACM 50 (1): 32–40, doi:10.1145/602382.602400

- Genova, J. (1994), "Turing's Sexual Guessing Game", Social Epistemology 8 (4): 314–326, doi:10.1080/02691729408578758

- Harnad, Stevan (2004), "The Annotation Game: On Turing (1950) on Computing, Machinery, and Intelligence", in Epstein, Robert; Peters, Grace, The Turing Test Sourcebook: Philosophical and Methodological Issues in the Quest for the Thinking Computer, Kluwer, http://cogprints.org/3322/

- Haugeland, John (1985), Artificial Intelligence: The Very Idea, Cambridge, Mass.: MIT Press.

- Hayes, Patrick; Ford, Kenneth (1995), "Turing Test Considered Harmful", Proceedings of the Fourteenth International Joint Conference on Artificial Intelligence (IJCAI95-1), Montreal, Quebec, Canada: 972–997

- Heil, John (1998), Philosophy of Mind: A Contemporary Introduction, London and New York: Routledge, ISBN 0-415-13060-3

- Hinshelwood, R.D. (2001), Group Mentality and Having a Mind: Reflections on Bion's work on groups and on psychosis

- Kurzweil, Ray (1990), The Age of Intelligent Machines, Cambridge, Mass.: MIT Press, ISBN 0-262-61079-5

- Kurzweil, Ray (2005), The Singularity is Near, Penguin Books, ISBN 0-670-03384-7

- Loebner, Hugh Gene (1994), "In response", Communications of the ACM 37 (6): 79–82, doi:10.1145/175208.175218, http://loebner.net/Prizef/In-response.html, retrieved 2008-03-22

- McCorduck, Pamela (2004), Machines Who Think (2nd ed.), Natick, MA: A. K. Peters, Ltd., ISBN 1-56881-205-1, http://www.pamelamc.com/html/machines_who_think.html

- Moor, James, ed. (2003), The Turing Test: The Elusive Standard of Artificial Intelligence, Dordrecht: Kluwer Academic Publishers, ISBN 1-4020-1205-5

- Penrose, Roger (1989), The Emperor's New Mind: Concerning Computers, Minds, and The Laws of Physics, Oxford University Press, ISBN 0-14-014534-6

- Russell, Stuart J.; Norvig, Peter (2003), Artificial Intelligence: A Modern Approach (2nd ed.), Upper Saddle River, NJ: Prentice Hall, ISBN 0-13-790395-2

- Saygin, A. P. (2000), "Turing Test: 50 Years Later", Minds and Machines 10 (4): 463–518, doi:10.1023/A:1011288000451, http://crl.ucsd.edu/~saygin/papers/MMTT.pdf.

- Saygin, A. P.; Cicekli, I.; Akman, V. (2000), "Turing Test: 50 Years Later", Minds and Machines 10 (4): 463–518, doi:10.1023/A:1011288000451, http://crl.ucsd.edu/~saygin/papers/MMTT.pdf. Reprinted in Moor, James H., ed. (2003), The Turing Test: The Elusive Standard of Artificial Intelligence, Kluwer Academic, pp. 23–78, ISBN 1-4020-1205-5.

- Saygin, A. P.; Cicekli, I. (2002), "Pragmatics in human-computer conversation", Journal of Pragmatics 34 (3): 227–258.

- Searle, John (1980), "Minds, Brains and Programs", Behavioral and Brain Sciences 3 (3): 417–457, doi:10.1017/S0140525X00005756, http://members.aol.com/NeoNoetics/MindsBrainsPrograms.html. Page numbers above refer to a standard pdf print of the article. See also Searle's original draft.

- Shah, Huma; Warwick, Kevin (2010), Testing Turing's Five Minutes Parallel-paired Imitation Game in Kybernetes Turing Test Special Issue 4, April 2010

- Shah, Huma; Warwick, Kevin (2009), Emotion in the Turing Test: A Downward Trend for Machines in Recent Loebner Prizes in Vallverdú, Jordi; Casacuberta, David, eds. (2009), Handbook of Research on Synthetic Emotions and Sociable Robotics: New Applications in Affective Computing and Artificial Intelligence, Information Science, IGI, ISBN 978-1-60566-354-8

- Shapiro, Stuart C. (1992), "The Turing Test and the economist", ACM SIGART Bulletin 3 (4): 10–11, doi:10.1145/141420.141423

- Shieber, Stuart M. (1994), "Lessons from a Restricted Turing Test", Communications of the ACM 37 (6): 70–78, doi:10.1145/175208.175217, http://www.eecs.harvard.edu/shieber/Biblio/Papers/loebner-rev-html/loebner-rev-html.html, retrieved 2008-03-25

- Sterrett, S. G. (2000), "Turing's Two Tests for Intelligence", Minds and Machines 10 (4): 541, doi:10.1023/A:1011242120015 (reprinted in The Turing Test: The Elusive Standard of Artificial Intelligence edited by James H. Moor, Kluwer Academic 2003) ISBN 1-4020-1205-5

- Sundman, John (February 26, 2003), "Artificial stupidity", Salon.com, http://dir.salon.com/story/tech/feature/2003/02/26/loebner_part_one/index.html, retrieved 2008-03-22

- Thomas, Peter J. (1995), The Social and Interactional Dimensions of Human-Computer Interfaces, Cambridge University Press, ISBN 052145302X

- Traiger, Saul (2000), "Making the Right Identification in the Turing Test", Minds and Machines 10 (4): 561, doi:10.1023/A:1011254505902 (reprinted in The Turing Test: The Elusive Standard of Artificial Intelligence edited by James H. Moor, Kluwer Academic 2003) ISBN 1-4020-1205-5

- Turing, Alan (1948), "Intelligent Machinery", in Copeland, B. Jack, The Essential Turing: The ideas that gave birth to the computer age, Oxford: Oxford University Press, ISBN 0-19-825080-0

- Turing, Alan (October 1950), "Computing Machinery and Intelligence", Mind LIX (236): 433–460, doi:10.1093/mind/LIX.236.433, ISSN 0026-4423, http://loebner.net/Prizef/TuringArticle.html, retrieved 2008-08-18

- Turing, Alan (1952), "Can Automatic Calculating Machines be Said to Think?", in Copeland, B. Jack, The Essential Turing: The ideas that gave birth to the computer age, Oxford: Oxford University Press, ISBN 0-19-825080-0

- Zylberberg, A.; Calot, E. (2007), "Optimizing Lies in State Oriented Domains based on Genetic Algorithms", Proceedings VI Ibero-American Symposium on Software Engineering: 11–18, ISBN 978-9972-2885-1-7

- Weizenbaum, Joseph (January 1966), "ELIZA - A Computer Program For the Study of Natural Language Communication Between Man And Machine", Communications of the ACM 9 (1): 36–45, doi:10.1145/365153.365168

- Whitby, Blay (1996), "The Turing Test: AI's Biggest Blind Alley?", in Millican, Peter & Clark, Andy, Machines and Thought: The Legacy of Alan Turing, 1, Oxford University Press, pp. 53–62, ISBN 0-19-823876-2

- Adams, Scott (2008), Dilbert, http://www.dilbert.com/comics/dilbert/archive/images/dilbert2008033349280.jpg

Further reading

- Cohen, Paul R. (2006), "If Not Turing's Test, Then What?", AI Magazine 26 (4), http://www.cs.arizona.edu/~cohen/Publications/papers/IfNotWhat.pdf.

External links

- Turing Test Page

- The Turing Test - an Opera by Julian Wagstaff

- Turing Test at the Open Directory Project

- The Turing Test- How accurate could the turing test really be?

- Stanford Encyclopedia of Philosophy entry on the Turing test, by G. Oppy and D. Dowe.

- Turing Test: 50 Years Later reviews a half-century of work on the Turing Test, from the vantage point of 2000.

- Bet between Kapor and Kurzweil, including detailed justifications of their respective positions.

- Why The Turing Test is AI's Biggest Blind Alley by Blay Whitby

- TuringHub.com Take the Turing Test, live, online

- Jabberwacky.com An AI chatterbot that learns from and imitates humans

- New York Times essays on machine intelligence part 1 and part 2

- "Machines Who Think": Scientific American Frontiers video on "the first ever [restricted] Turing test."

- Wiki News: "Talk:Computer professionals celebrate 10th birthday of A.L.I.C.E."